Chapter 2

Reproducibility.

What is nature?

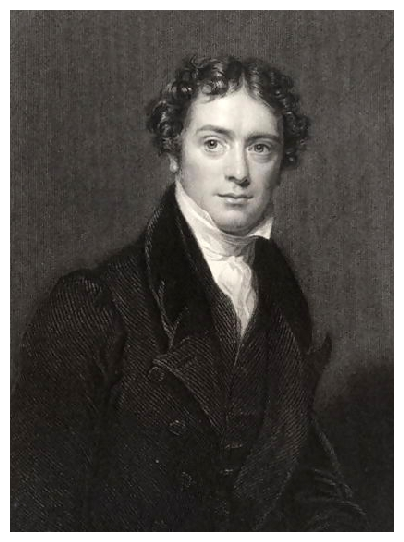

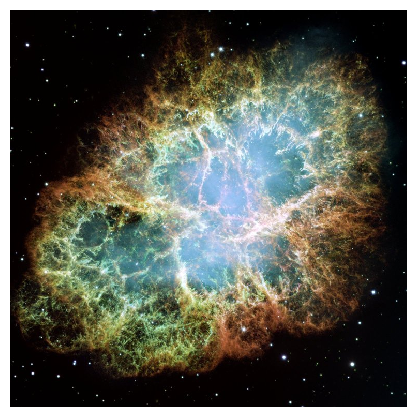

Michael Faraday is a fascinating character in the history of science. The son of a poor blacksmith, he had a formal education that, such as it was, ended by age 13; but it ended with his apprenticeship to a bookbinder and bookseller, and his informal self-education was beginning! This unconventional background left him knowing practically no mathematics. But he was an incredibly insightful experimentalist, and he had an intuitive way of understanding the world in mental pictures. No fewer than five laws or phenomena of science are named after him. His experimental discoveries came to dominate the science of electricity, and of chemistry too, for the first two thirds of the nineteenth century. His conceptualization of the effects of electromagnetism in terms of lines of force laid the foundation for Maxwell's mathematical equations of electromagnetism, and for the modern concept of a "field", in terms of which much fundamental physics is now expressed.

24

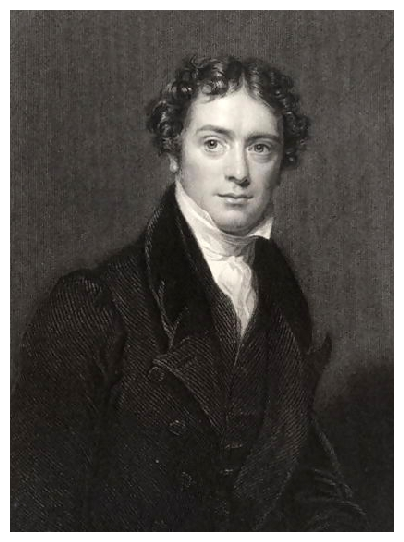

Figure 2.1: Michael Faraday as a young man

It was said of Faraday that whenever he heard of some new result or phenomenon, reported in a public meeting or a scientific journal, the first thing he would do was to attempt to reproduce the effect in his own laboratory. The reason he gave for this insistence was that his imagination had to be anchored in what he called the "facts". He understood in his bones that science is concerned with reproducible phenomena, which can be studied anywhere under controlled conditions and which give confirmatory results. "Without experiment I am nothing," he once said.

Faraday's attitude reflects what is often taken for granted in talking about science: that science deals with matters that show reproducibility. For a phenomenon to be a question of science, it has to give reproducible results independent of who carries out the experiment, where, and when. What the Danish professor Hans Oersted observed during a lecture demonstration to advanced students at the university in Copenhagen in the spring of 1820 ought to be observed just the same when Faraday repeated the experiment later at the Royal Institution in London. And it was. Here, by the way, I am alluding to the discovery that a compass needle is deflected by a strong electric current nearby, which demonstrated for the first time the mutual dependence of electricity and magnetism. According to the students present at his demonstration, the discovery was an accident that occurred while a fine wire was being heated to incandescence by an electric current. But Oersted's own reports claim greater premeditation on his part25.


2.1 The meaning of experiment

Imagine a family trip to an Australian beach. The youngster of the family, three-year-old Andrew, is there for the first time. He is fascinated by all the new experiences. He idly, perhaps accidentally, kicks the gravel on the way down to the sand, and pauses to hear it rattle. When seated in the sun he grabs handfuls of sand and throws them awkwardly over himself, and over anyone else who strays too near. He is fearful of, and full of wonder at, the unexpected waves, even the gentle ones that surge up the smooth sand towards him. Sarah, the eight-year-old, is more deliberate. She is on a trek down the beach to find treasures: smooth pebbles of special shape or color, sand dollars, shells, seaweed, and maybe a blue crab. She returns with her bucket full and proceeds to sort her collection carefully into different kinds and categories. Cynthia watches both with motherly affection; then her gaze shifts to the surf. She delights in its almost mesmerizing rhythm: rolling in and out. She wonders, at an almost subconscious level, what makes the waves adopt that particular tempo. Dan, her husband, chooses a spot well up from the water for their base, to avoid having to move as the tide comes in. He erects the sunshade, trying a number of different rocks until he finds the ones that best keep it upright in the soft sand. He lies alongside his wife where the shadow will continue, even as the sun moves across the sky, to protect his fair skin from the excess ultraviolet radiation let through by the Antarctic ozone hole.

Humans experiment from their earliest conscious moments. They are fascinated by regularities perfect and imperfect, and by similarities and distinctions. In children we call this play. In adults it is often trial and error devoted to a specific purpose, but sometimes it is simply a fascination that seeks no further end than understanding. We are creatures who want to know about the regularities of the world. And the way we find out about them is largely by experiment.

Induction is often touted as the defining philosophical method of natural science. It takes little thought and no detailed philosophical analysis to recognize that the deductive logic of the syllogism is inadequate for the task of discovering general facts about the natural world. "All boggles are biggles; no baggles are biggles; therefore no baggles are boggles" is the stuff of IQ tests, not a way to understand the universe. By contrast, induction, the generation of universal laws or axioms from the observation of multiple specific instances, is both more fraught with logical difficulty and vastly more powerful. But as a practical procedure it is hardly more than a formalization of the everyday processes of discovery illustrated by our Australian beach.
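
Checking the syllogism mechanically shows why deduction cannot do this job. The Python sketch below is only an illustration, and its helper names (is_valid, all_are, none_are) are hypothetical. It treats each term as the set of things it applies to in a small imaginary universe. It then tries every possible assignment, looking for one in which the premises are true and the conclusion false. Trying every world of three things is a brute-force demonstration, not a general proof.

```python
from itertools import product

# Hypothetical terms from the example syllogism.
TERMS = ("boggle", "biggle", "baggle")


def is_valid(premises, conclusion, n=3):
    """Return True if `conclusion` holds in every world of n things in which
    all `premises` hold; return False as soon as a counterexample is found.

    A world maps each term to the set of things (0..n-1) it applies to.
    """
    for flags in product((False, True), repeat=len(TERMS) * n):
        world = {
            term: {i for i in range(n) if flags[k * n + i]}
            for k, term in enumerate(TERMS)
        }
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # premises true, conclusion false
    return True


def all_are(a, b):
    """'All a are b': the a's form a subset of the b's."""
    return lambda world: world[a] <= world[b]


def none_are(a, b):
    """'No a are b': the a's and the b's have nothing in common."""
    return lambda world: not (world[a] & world[b])


# All boggles are biggles; no baggles are biggles;
# therefore no baggles are boggles.
print(is_valid([all_are("boggle", "biggle"), none_are("baggle", "biggle")],
               none_are("baggle", "boggle")))  # True

# All boggles are biggles; therefore all biggles are boggles (invalid).
print(is_valid([all_are("boggle", "biggle")],
               all_are("biggle", "boggle")))  # False
```

The first check finds no counterexample, so the syllogism is valid. The second finds one (a world containing a biggle but no boggles), so that inference is not valid. In neither case does the program consult the world itself. That is why deduction alone cannot discover general facts about the world: the general premises must come from somewhere else.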

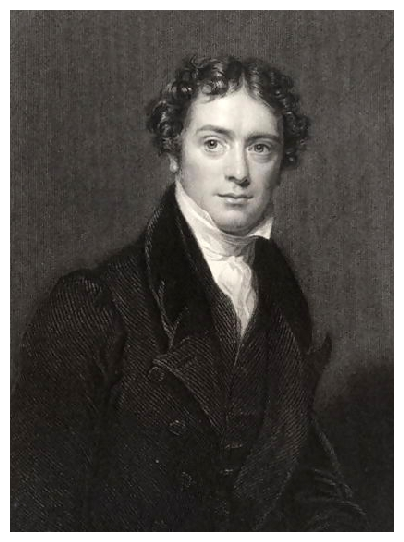

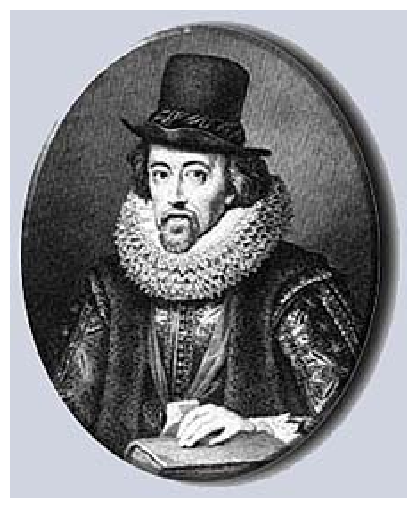

Figure 2.2: Francis Bacon

Francis Bacon (1561-1626) is often credited with establishing the inductive method as primary in the sciences, and thereby laying the foundations of modern science. Here is what Thomas Macaulay, in his 1837 essay on Bacon, thought of that viewpoint.


The vulgar notion about Bacon we take to be this, that he invented a new method of arriving at truth, which method is called Induction, and that he detected some fallacy in the syllogistic reasoning which had been in vogue before his time. This notion is about as well founded as that of the people who, in the middle ages, imagined that Virgil was a great conjurer. Many who are far too well-informed to talk such extravagant nonsense entertain what we think incorrect notions as to what Bacon really effected in this matter.

The inductive method has been practiced ever since the beginning of the world by every human being. It is constantly practiced by the most ignorant clown, by the most thoughtless schoolboy, by the very child at the breast. That method leads the clown to the conclusion that if he sows barley he shall not reap wheat. By that method the schoolboy learns that a cloudy day is the best for catching trout. The very infant, we imagine, is led by induction to expect milk from his mother or nurse, and none from his father26.

Bacon thought, and claimed, that his analysis of induction provided a formulation of how to obtain knowledge. That is why he named what is perhaps his crowning work the "New Organon", signaling that it was to replace the old "Organon", the collection of Aristotle's works on logic that dominated the thinking of the schoolmen of Bacon's day. Bacon did not invent or even identify induction. It had in fact already been identified by Aristotle himself, as Bacon well knew. What Bacon did was analyze induction thoroughly. He offered corrections to the way it was mispracticed, emphasizing the need for many examples, for caution against jumping to conclusions, and for giving counter-examples as much weight as confirmatory instances. He advocated gathering together tables of such contrasting instances, almost as if by a process of careful accounting one could implement a methodology of truth. These methodological admonitions are interesting and in some cases insightful, but they fall far short of Bacon's hopes for them. Scientists don't need Bacon to tell them how to think. And they didn't in 1600. What it seems philosophers did need to be told, or at any rate what is arguably Bacon's key contribution, is captured in his criticism of prior views about the ends, that is, the purposes, of knowledge. He complains that knowledge had been sought as "... a couch whereupon to rest a searching and restless spirit; or a terrace for a wandering and variable mind to walk up and down with a fair prospect; or a tower of state for a proud mind to raise itself upon; or a fort or commanding ground for strife and contention; or a shop for profit or sale; and not a rich storehouse for the glory of the Creator and the relief of man's estate."27

For the schoolmen, and for generations of philosophers before them all the way back to Aristotle, true learning was for the development of the mind, the moral fiber, and the upright citizen, not for practical everyday provision. In its Christianized version, everyone agreed, learning was also for the glory of the Creator. Bacon's innovation was to insist that science must also be for the relief of man's estate: that it must be practical. This insistence on the practical transformed speculative philosophy into natural science. Macaulay summarizes the point this way.

What Bacon did for inductive philosophy may, we think, be fairly stated thus. The objects of preceding speculators were objects which could be attained without careful induction. Those speculators, therefore, did not perform the inductive process carefully. Bacon stirred up men to pursue an object which could be attained only by induction, and by induction carefully performed; and consequently induction was more carefully performed. We do not think that the importance of what Bacon did for inductive philosophy has ever been overrated. But we think that the nature of his services is often mistaken, and was not fully understood even by himself. It was not by furnishing philosophers with rules for performing the inductive process well, but by furnishing them with a motive for performing it well, that he conferred so vast a benefit on society.

What Bacon was advocating was knowledge that led to what we would today call technology. This emphasis has drawn the fire of a school of modern critics of science as a whole (part of the Science Studies movement), who argue that science is not so much about knowledge as it is about power. Despite Francis Bacon's many failings of legal and personal integrity, there seems little reason to question the sincerity of his avowedly humanitarian motivation towards practical knowledge. He lived in the court of monarchic power and rose to become the most powerful judge in England before his conviction for corruption and bribery. So he was no naive idealist, and he is a natural target for the suspicions of the science critics. But he was at the same time a convinced Christian, who would not have been insensitive to the appeal of a philosophy motivated by practical charity, especially since it supported the escape from scholasticism that he also yearned for. Whatever the sincerity of Bacon's theological arguments in favor of practical knowledge, there can be little doubt that they furnished a persuasive rationale that helped to set the course of modern science, and that persists today.

Technology demands reproducibility. Technology has to be based upon a reliable response in the systems that it puts into operation. Technology seeks to be able to manipulate the world in predictable ways. The knowledge that gives rise to useful technology has to be knowledge about the world in so far as it is reproducible and gives rise to tangible effects. These are precisely the characteristics of modern science.

When we talk about experiments, however, we normally conjure up visions of laboratories with complicated equipment and studious, bespectacled, possibly white-coated scientists, not a day at the beach. The practical experimentation at the beach, which is our symbol for the acquisition of everyday knowledge, does not draw strong distinctions between the levels of confidence with which we expect the world to follow our plans. At any time we hold a vast array of beliefs, with certainties ranging from tentative hypothesis to unshakable conviction, and most often we draw little conscious distinction between them. In most cases we have no opportunity to do so, since the press of events obliges us every moment to make decisions about our conduct based on imperfect and uncertain knowledge. Establishing confidence in reproducible knowledge that is certain enough for practical application, and that meets our expectations for natural science, requires a more deliberate approach to experimentation. This deliberate, capitalized Experiment is a formalization of the notion of reproducibility.

A formal Experiment is generally conducted in the context of some already-articulated theoretical expectation. It lies at one end of a spectrum of degrees of deliberateness in experimentation; the idle play of the beach lies at the other. In between are random exploratory investigations, fact and specimen gathering, systematic documentation and measurement, the trial and error of technique development and instrumentation, and the elimination of spurious ideas and mistakes.

At its purest, an Experiment is devised specifically to test a theoretical model or principle. Isaac Newton's famous demonstration that white light is in fact composed of a spectrum of light of different colors is often cited as an illustration of experimental investigations leading up to a "crucial experiment". In his letter to the Royal Society of February 1672 he relates, in a personal story-telling style, his initial experiments with the refraction of light through a prism. He also describes his demonstration, by careful measurement, that the more than two-degree spread of the refracted colors could not be caused by the angular size of the sun's disc. He describes various possible explanations of the observations that he ruled out, and then says:

28

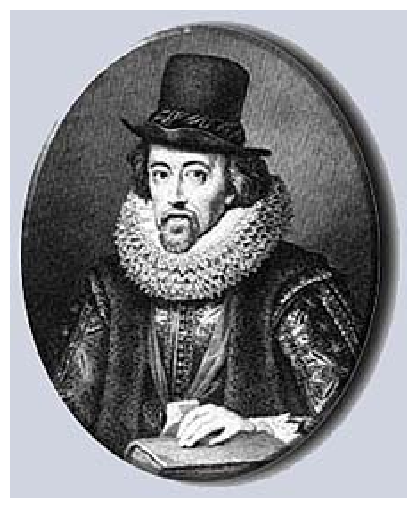

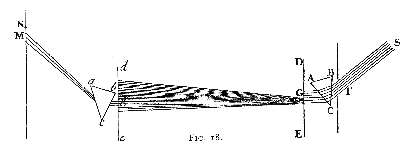

Figure 2.3: Newton's drawing of his Experimentum Crucis, published much

later in his Opticks (1704)

.

The gradual removal of these suspitions, at length led me to the Experimentum Crucis, which was this: I took two boards, and placed one of them close behind the Prisme at the window, so that the light might pass through a small hole, made in it for the purpose, and fall on the other board, which I placed at about 12 feet distance, having first made a small hole in it also, for some of that Incident light to pass through. Then I placed another Prisme behind this second board, so that the light, trajected through both the boards, might pass through that also, and be again refracted before it arrived at the wall. This done, I took the first Prisme in my hand, and turned it to and fro slowly about its Axis, so much as to make the several parts of the Image, cast on the second board, successively pass through the hole in it, that I might observe to what places on the wall the second Prisme would refract them. And I saw by the variation of those places, that the light, tending to that end of the Image, towards which the refraction of the first Prisme was made, did in the second Prisme suffer a Refraction considerably greater then the light tending to the other end. And so the true cause of the length of that Image was detected to be no other, then that Light consists of Rays differently refrangible, which, without any respect to a difference in their incidence, were, according to their degrees of refrangibility, transmitted towards divers parts of the wall29.

Even this report and Newton's conclusions were not without controversy. Others seeking to reproduce his results observed different dispersions of the light, presumably because they used prisms with different angles. A correspondence lasting some years followed. Within a remarkably short time, however, the demonstration became practically universally accepted. Its key qualitative features could be reproduced at will by experimenters of only moderate competence, and so, with attention to the full details, could its quantitative aspects.

2.2 Is reproducibility really essential to science?

Observational Science

Most thoughtful people recognize the crucial role that repeatable experiments play in the development of science. Nevertheless, an important objection arises to the view that science is utterly dependent on reproducibility for its operation. The objection is this. What about a discipline like astronomy? The heavenly bodies are far outside our reach. We cannot do experiments on them, or at least we could not in the days before space travel, and we still cannot for those at stellar distances. Yet who in their right mind would deny astronomy the status of science?

Or consider the early stages of botany or zoology. For centuries, those disciplines consisted largely of the systematic gathering of specimens, which were cataloged and classified rather than experimented on. Of course, today we do have a more fundamental understanding of the cellular and molecular basis of living organisms, developed in large part from direct manipulative experiments. But surely it would be pure physicist's arrogance to say that botany or zoology were not science, even in their classification stages.

In short, what about observational sciences? Surely it must be granted that they are science. If they are exceptions to the principle that science requires reproducibility, then that principle rings hollow.

Some commentators find this critique so convincing that they adopt a specialized expression to describe the type of science that is based on repeatable experiments. They call it "Baconian Science". The point of this expression is to suggest that there are types of science other than the Baconian model. What I suppose people who adopt this designation have in mind are the observational sciences. They think that observational sciences, in which we cannot perform experiments on the phenomena of interest at will, don't fit the model of reproducibility. There is some irony in using the expression with this meaning, since Bacon was at great pains to emphasize the systematic gathering of observations without jumping to theoretical conclusions. He certainly did not discount observational science, even though he did emphasize the motivation of practicality.

However, we need to think carefully about whether observational sciences really are exceptions to reproducibility. Let us first consider astronomy. It is an appropriate first choice because in many ways astronomy was historically the first science. Humans gazed into the heavens and pondered what they saw. The Greeks had extensive knowledge of the constellations and their cycles. And it was the consideration of the motions of the planets, more than anything else, that led to the Newtonian synthesis of gravity and dynamics. But astronomy, considered in its proper historical context, is not an exception to the scientific dependence on reproducibility. Far from it. For the pre-industrial age, astronomy was the archetype of reproducibility. It was precisely because the heavens showed remarkable, systematically repeated cycles that astronomy commanded the attention of so many philosophers attempting to explain the motions of the heavenly bodies. And it was because this repeatability gave astronomers the ability to predict the phenomena of the heavens with amazing precision that astronomy acquired an almost mystical status.

What is more, astronomy satisfied the requirement of independence of place and observer with superb accuracy. More than almost any other phenomenon, the sky looks the same from wherever you see it. As long-distance travel became more commonplace, the systematic changes in the appearance of the heavens with global position (latitude, for example) were soon known and relied upon for navigation. And what could be more common to the whole of humanity than the sky?

Far from being an exception to the principle of reproducibility, astronomy's success depends upon that principle. Astronomy insists that all observers will see consistent pictures of the heavens when they observe. Those observations are open to all to experience (in principle). And those observations can be predicted ahead of time with great precision.

One way to highlight the importance of reproducibility in the context of astronomy is to contrast Astronomy (the scientific study of the observable universe) with Astrology (the attempt to predict or explain human events from the configurations of the heavenly bodies). Many people still assiduously follow their daily horoscopes. Regarded as cultural tradition, that is probably no more harmful than wondering, on the feast of Candlemas, whether the groundhog Punxsutawney Phil saw his shadow, and recalling that if so, by tradition, there will be six more weeks of winter. Astrology, for most people, is a relatively harmless cultural superstition. But surely no thinking person today would put forward astrology as a science. Its results are not reproducible. Its predictions appear to have no value beyond those of common sense. And its attempts to identify particular characteristics shared by people born in certain months simply don't give reliable results. Once upon a time there was little distinction between astronomy and astrology; in the pre-scientific age their practice seems to have been a confusing mix of the two. A major success of the scientific revolution was the disentangling of astronomy from astrology. The most important principle that separates the two activities is that astronomy describes, systematizes and ultimately explains the observations of the heavens in so far as they are reproducible and clear to all observers.

There are, of course, unique phenomena in astronomy. Supernovae, for example, each have unique features, and each is first observed on a particular date. In that sense they are observations of natural history. On 4th July 1054, astronomers in China first observed a new star in the constellation of Taurus. Its brightness grew visibly day by day. During its three brightest weeks it was reported as visible in daylight, four times brighter than the evening star (Venus). It remained visible to the naked eye for about two years. It is thought that Anasazi Indian pictographs in Arizona also record the event. Surprisingly, no European records of the event seem to have survived.30

If this were the only supernova ever observed, we would probably be much more hesitant to give the reports credence. But there are approximately twenty recorded supernovae (or possibly novae) in our galaxy during the 2000 years before 1700. And with modern telescopes, supernovae in other galaxies can also be observed fairly frequently.

The SN1054 supernova is probably the best known, because it gave rise to the beautiful Crab Nebula, discovered in 1731. That the gas in the Nebula is expanding was established in the early 20th century by observing the splitting of spectral lines caused by the Doppler effect: the nearer parts of the Nebula are moving towards us and the farther parts away from us. In 1968 a new type of pulsing radio emission was discovered coming from the center of the Nebula. This source, the Crab Pulsar, is also observable in the visible spectrum. It is now known to be an extremely compact neutron star, rotating an astonishing 30 times per second.

31

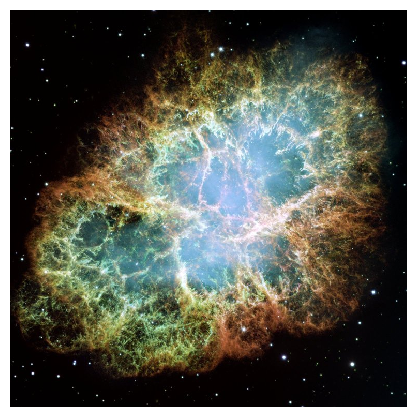

Figure 2.4: Photograph by the Hubble Telescope of the Crab

Nebula.

There is readily accessible, reproducible evidence of the date of the SN1054 supernova. The expansion rate of the Crab Nebula can be established by comparing photographs separated in time. One can then extrapolate that expansion backward and discover when the now-expanding rim must have been gathered together at the site of the explosion. This process, applied for example by an undergraduate at Dartmouth College to photographs taken 17 years apart, gives a date in the middle of the 11th century, in full agreement with the historical record.32 [For technical precision we should note that, since the nebula is about 6000 light years away, the explosion itself took place some 6000 years before its light reached Earth in 1054; likewise, the light we now see from the nebula left it about 6000 years ago.]
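
The backward extrapolation is simple arithmetic. The sketch below is a minimal Python illustration, not the Dartmouth analysis. It assumes the filaments have moved outward at a constant rate, and it uses hypothetical function names and invented measurements. Suppose a filament lies at angular distance θ1 from the center of expansion on one photograph and at θ2 on a photograph taken Δt years later. Its outward rate is then (θ2 − θ1)/Δt, so the time it needed to travel θ2 from the center is θ2·Δt/(θ2 − θ1).

```python
def expansion_age(theta1, theta2, year1, year2):
    """Years between the start of the expansion and the later photograph.

    theta1 and theta2 are the angular distances of one filament from the
    center of expansion (any consistent unit, e.g. arcseconds) measured on
    photographs taken in year1 and year2. Assumes a constant outward speed.
    """
    if year2 <= year1:
        raise ValueError("year2 must be later than year1")
    if theta2 <= theta1:
        raise ValueError("the filament must have moved outward")
    # rate = (theta2 - theta1) / (year2 - year1) and age = theta2 / rate,
    # combined into a single expression.
    return theta2 * (year2 - year1) / (theta2 - theta1)


def apparent_explosion_year(theta1, theta2, year1, year2):
    """Calendar year in which light from the explosion would have reached us."""
    return year2 - expansion_age(theta1, theta2, year1, year2)


# Invented measurements, for illustration only: a filament 90 arcsec from
# the center on a photograph from 1900 and 100 arcsec on one from 2000.
age = expansion_age(90.0, 100.0, 1900, 2000)
year = apparent_explosion_year(90.0, 100.0, 1900, 2000)
print(f"expansion age {age:.0f} years, explosion seen around {year:.0f}")
# expansion age 1000 years, explosion seen around 1000
```

Both photographs record light that has crossed the same distance. The date this yields is therefore the date when light from the explosion reached Earth, which is why it can be compared directly with the 1054 records. If the filaments have in fact sped up or slowed down over the centuries, the constant-rate assumption will bias the estimate.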

Notice the following characteristics. First, the Crab Nebula's supernova, though it had its own unique features, was an event of a type represented by numerous other examples. Second, the event itself was observable to, and recorded by, multiple observers. Third, the supernova left long-lived evidence that for years could be seen by anyone who looked. That evidence still offers rich opportunities for investigation to experts around the world, who simply have to point their telescopes in the right direction. These are the characteristics of reproducibility in the observational sciences.

What about botany and zoology, in their collection and classification stages? Again, careful consideration convinces us that these do rely on reproducibility. If only a single specimen is available, it remains largely a curiosity. Who is to say that it is not simply some peculiar mutant, or even a hoax? But when multiple similar specimens are found, it becomes possible to detect what is common to all of them and to discount as individual variation those characteristics that are not. Indeed, in the life sciences the best option is to have breeding specimens, which guarantee a supply of new examples; the characteristics that they reproduce reliably are those on which the scientific classification is based.

All right, what about geology, then? Its specimens don't reproduce. But again, the observation of many different examples of the same types of rock, or formation, or other phenomenon, is essential to its scientific progress. In its earliest stages, before geophysics had more direct physical descriptions of its processes, geology progressed as a science largely by identifying multiple examples of the same processes at work, that is, by relying on repeatability. As the scientific framework for understanding the earth's formation was gradually built up, the systematic aspects of the rock formations began to become clear. Events could be correlated to produce an ordered series of ages. Then additional techniques, such as radioactive dating, enabled geologists to assign quantitative dates to the different geological ages. Those dates rest on multiple assessments of the time that must have elapsed since the formation of rocks identified as belonging to each period. This whole process requires the ability to make multiple observations, observe repeatable patterns, and perform repeatable physical tests on the samples.
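
Radioactive dating rests on a short calculation. The minimal Python sketch below uses the simplest textbook assumptions: the rock formed with no daughter atoms, and since then it has neither gained nor lost parent or daughter atoms. The function name and all the numbers are hypothetical. A parent isotope decays exponentially, so the ratio of daughter atoms to surviving parent atoms fixes the elapsed time. Running the same calculation on several samples from one formation is the kind of repeated physical test described above.

```python
import math
import statistics


def radiometric_age(daughter_to_parent, half_life):
    """Age of a sample from its ratio of daughter atoms to surviving parent atoms.

    Assumes a closed system that contained no daughter atoms when it formed.
    The result is in the same time unit as `half_life`.
    """
    if daughter_to_parent < 0 or half_life <= 0:
        raise ValueError("need a non-negative ratio and a positive half-life")
    decay_constant = math.log(2) / half_life
    # Parent atoms decay as P = P0 * exp(-decay_constant * t), and each decay
    # adds one daughter atom, so D / P = exp(decay_constant * t) - 1.
    return math.log1p(daughter_to_parent) / decay_constant


# Invented ratios for three samples of one formation, with an invented
# half-life of 700 (million years); close agreement is what we look for.
ratios = [0.48, 0.50, 0.52]
ages = [radiometric_age(r, 700.0) for r in ratios]
print([round(a) for a in ages], round(statistics.mean(ages)))
```

A ratio of 1 corresponds to exactly one half-life, and a ratio of 3 to two half-lives. Real dating methods must also deal with daughter atoms present at formation and with other complications that this sketch ignores.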

So observational science requires multiple repeatable examples of the phenomenon or specimen under consideration. It does not require that these can be produced at will, in the way that a laboratory experiment can in principle be performed at any hour on any day. Observations may be constrained by the fact that the examples of interest occur only at certain times (for example, eclipses) or in certain places (for example, in specific habitats), over which we might have little or no control. But it does require that multiple examples exist and can be observed.

Figure 2.4: Photograph by the Hubble Telescope of the Crab

Nebula.

One can get readily accessible reproducible evidence of the date of

the SN1054 supernova. The expansion rate of the Crab Nebula can be

established by comparing photographs separated in time. One can then

extrapolate that expansion backward and discover when the

now-expanding rim must have been all together in the local

explosion. This process, applied for example by an undergraduate at

Dartmouth College to photographs taken 17 years apart, gives a date in

the middle of the 11th century. In 100% agreement with the historic

record.32 [For technical precision we should note that, since the nebula

is 6000 light years away, the explosion and the emission of the light

we now see took place 6000 years earlier.]

Notice the following characteristics. First the Crab Nebula's

supernova, though having its own unique features, was an event of a

type represented by numerous other examples. Second, the event itself

was observable to, and recorded by, multiple observers. Third, the

supernova left long-lived evidence that for years could be seen by

anyone who looked, and still gives rich investigation

opportunities to experts from round the world, who simply have to

point their telescope in the right direction. These are the

characteristics of reproducibility in the observational sciences.

What about botany and zoology, in their collection and classification

stages? Again, careful consideration convinces us that these do rely

on reproducibility. If only a single

specimen is

available, it remains largely a curiosity. Who is to say that this not

simply some peculiar mutant, or even a hoax? But when multiple similar

specimens are found, then it is possible to detect what is common to

all specimens and to discount as individual variation those

characteristics that are not. Indeed, in the life sciences the best

option is to have breeding specimens, which guarantee the ability to

establish new examples for which the reproducible characteristics are

those on which the scientific classification is based.

All right, what about geology, then? Its specimens don't reproduce. But again, the observation of many different examples of the same types of rock, or formation, or other phenomenon, is essential to its scientific progress. In its earliest stages, before geophysics had more direct physical descriptions of its processes, geology progressed as a science largely by identifying multiple examples of the same processes at work, that is, by relying on repeatability. As the scientific framework for understanding the earth's formation was gradually built up, the systematic aspects of the rock formations began to become clear. Events could be correlated to produce an ordered series of ages. Then additional techniques such as radioactive dating enabled geologists to assign quantitative dates to the different geological ages, based on multiple assessments of the time that must have elapsed since the formation of rocks identified as belonging to each period. This whole process requires the ability to make multiple observations, observe repeatable patterns, and perform repeatable physical tests on the samples.

So observational science requires multiple repeatable examples of the phenomenon or specimen under consideration. It does not require that these can be produced at will, in the way that a laboratory experiment can in principle be performed at any hour on any day. Observations may be constrained by the fact that the examples of interest occur only at certain times (for example, eclipses) or in certain places (for example, a specific habitat), over which we might have little or no control. But it does require that multiple examples exist and can be observed.

Randomness

A second important objection to the assertion that science requires reproducibility concerns the occurrence in science of phenomena that are random. By definition, such events are not predictable, or reproducible, at least as far as their timing is concerned. If science is the study of the world in so far as it is reproducible, how come probability, the mathematical embodiment of randomness, the ultimate in non-reproducibility, plays such a prominent role in modern physics? Here, I think, it is helpful to take a historical perspective on science for a moment.

Pierre Laplace is famous (amongst other things) for his encounter with the Emperor Napoleon. Laplace explained to him his deterministic understanding of nature. Bonaparte is reported to have asked, "But where in this scheme is the role for God?", to which Laplace responded, "I have no need of that hypothesis." Regardless of his theological position, Laplace's philosophical view of science was rather commonplace for his age. It was that science was in the process of showing that the world is governed by a set of deterministic equations. If one knew the initial conditions of these equations for all the particles in the universe, one could, in principle at least, solve those equations and thereby predict the future of everything to arbitrary accuracy. In other words, Laplace's view, and probably that of the majority of scientists of his age, was that science was in the business of explaining the world as if it were completely predictable, subject to no randomness.

This view persisted until the nineteen-twenties, when the formulation of quantum mechanics shocked the world of science by demonstrating that the many highly complicated and specific details of atomic physics could be unified, explained, and predicted with high accuracy using a totally new understanding of reality. At the heart of this new approach was a concession that at the atomic level events are never deterministic; they are always predictable only to within a significant uncertainty. Heisenberg's uncertainty principle is the succinct formulation of that realization. Quantum mechanics possesses the mathematical descriptions to calculate the probability of events accurately, but not to predict them individually in a reproducible way. Perfect reproducibility, it seems, exists only as an ideal, and that ideal is approached only at the macroscopic scale of billions of atoms, not at the microscopic scale of single atoms.

Where does that leave the view that science demands reproducibility? Did 20th-century science in fact abandon that principle?

It seems clear to me that science has not at all abandoned the principle. The principle that science describes the world in so far as it is reproducible is as intact as ever. For hundreds of years, science pursued that task with spectacular success. Quantum mechanics, shocking though it seemed and still seems, did not halt or even alter the basic drive. What it did was to show that the process of describing the world in reproducible terms appears to have limits, fundamental limits, that are built into the fabric of the universe. The quantum picture accepts that there are some boundaries beyond which our reproducible knowledge fails in principle (not just for technical reasons). Even as innovative a scientist and supple a philosopher as Albert Einstein was repelled by the prospect, famously resisting the notion that the randomness of the world is in principle impenetrable with his comment "God does not play dice". Einstein, like scientists before and after him, was committed to discovering the world in so far as it is reproducible, not arbitrary. The overwhelming opinion among physicists today, though, is that Einstein was wrong in this epigram. God does play dice, in the sense that some things are simply irreproducible.

But that does not stop science from proceeding to explore what is measurable and predictable. Science does that, first, by pressing up to the limits of what is reproducible. If individual events are not predictable, it calculates the probabilities of events. Quantum theory provides this reproducible measure in so far as reproducibility exists. The interesting thing about quantum mechanics is that it is governed by deterministic equations. These equations are named after the great physicists Erwin Schrödinger and Paul Dirac. A problem in quantum mechanics can be solved by finding a solution of these equations. The equations take an initial state of the system and then predict the entire future evolution of that state from that time forward. This is such a deterministic process that some people argue that quantum mechanics does not undermine determinism. But such a view misses the key point. The function that is solved for, and the future system state that is predicted, are no longer the definite position or velocity of a particle, or any of the many other quantities that we are familiar with in classical dynamics (or the everyday world). Instead, the function is essentially a probability amplitude (the wave function), whose squared magnitude gives the probability of a particle being in a certain position or having a certain velocity. This is an example of science pressing up against the limits of reproducibility. The world is not completely predictable by mathematical equations, even in principle, but science wants to describe it as completely as possible, to the extent that it is predictable. So when it runs up against non-predictability, science invokes deterministic mathematics, but uses that mathematics to govern just the probability of the occurrence of events. Probability is, in a sense, the extent to which random events display reproducibility. Science describes the world in terms of reproducible events to the extent that it can be described that way.
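The claim that probability measures the extent to which random events display reproducibility can be illustrated with a toy simulation. The Python sketch below is not quantum mechanics. It uses a pseudo-random number generator as a stand-in for genuinely random events, and the "atoms", the decay probability `P`, the sample size `N`, and the function `decay_run` are all hypothetical. Each run decides independently which atoms decay. The particular atoms that decay differ from run to run, but the decayed fraction repeats to within the expected sampling noise.

```python
import random


def decay_run(n_atoms: int, p_decay: float, seed: int) -> tuple[set[int], float]:
    """One simulated 'experiment': each atom independently decays with
    probability p_decay. Returns the set of decayed atoms and their fraction."""
    rng = random.Random(seed)  # pseudo-random stand-in for genuine randomness
    decayed = {i for i in range(n_atoms) if rng.random() < p_decay}
    return decayed, len(decayed) / n_atoms


N, P = 100_000, 0.5  # hypothetical sample size and decay probability
runs = [decay_run(N, P, seed) for seed in range(5)]

# Individual outcomes are not reproducible: compare which atoms decayed.
first, second = runs[0][0], runs[1][0]
overlap = len(first & second) / len(first)  # about P if the runs are independent

# The probability is reproducible: every run's fraction is close to P.
fractions = [frac for _, frac in runs]
print("decayed fractions:", [round(f, 3) for f in fractions])
print(f"share of run-0 decays repeated in run 1: {overlap:.2f}")
```

As N grows, the spread of the fractions shrinks roughly as 1/sqrt(N). That shrinking spread is the sense in which a probability can be measured reproducibly, even though no single outcome can.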

And science proceeds, second, by pressing on into the other areas of scientific investigation that still lie open to reproducible description: describing and understanding them as far as they are indeed reproducible.

The history of nature

A third important challenge to the principle of reproducibility lies in the types of events that are inherently unique. How could we possibly have knowledge of more than one universe? How, then, can reproducibility be a principle applied to the Big Bang origin of the universe? Or, perhaps less fundamentally, but probably just as practically, how can we apply principles of repeatability to the origin of life on earth, or to the details of how the earth's species got here?

I think these issues all boil down to the question "What about natural history?" Is the history of nature part of science?

It is helpful to think first about the ways that science can tell us about the past. Today, our ability to analyze human DNA has become extremely important as legal evidence. It can help prove or disprove the involvement of a particular person in a crime under investigation. The high-profile cases tend, of course, to be capital cases, but increasingly this type of forensic evidence is decisive in a wider variety of situations. Obviously this is an example of a way in which scientific evidence is extremely powerful in telling us something about history: not as overwhelming as it is often portrayed in popular TV series such as CSI, but still very powerful. Important as this evidence may be, its significance still depends on the rest of the context of the case. Moreover, the laboratory results themselves are rarely totally unimpeachable. We have to be sure that the sample really came from the place the police say it did. We have to be sure it was not tampered with before it got to the lab or while it was there. We have to be sure that the results are accurately reported, and so on. So science can provide us with highly persuasive evidence, in part because of its clarity and the difficulty of faking it. But when we adduce scientific evidence for specific unique events of history (even recent history), our confidence is, in principle, less than if we had access to multiple examples of the same kind of phenomenon.

To illustrate that difference in levels of confidence, ask yourself how successful a defense lawyer would likely be if they tried to defend against DNA evidence by arguing that it is a scientific fallacy that DNA is unique to each individual. Trying to impeach the principles of science would be a ridiculously unconvincing way to discredit evidence in a criminal case. Those principles have been established by innumerable laboratory tests by independent investigators over years of experience, and subjected to intense scrutiny by experts. General principles of science, such as the uniqueness of DNA, need more compelling investigation and evidence to establish them than we require for everyday events. But they get that investigation, and the support of the wider fabric of science into which they are woven. So, once they are established, we grant them a much higher level of confidence. What's more, if there is reason to doubt them, we can go back and get more evidence to resolve the question.

But notice that our confidence in scientific principles is not the same as confidence in our knowledge of the particular historical question. We are confident that, insofar as the world is reproducible and can thus be scientifically described, DNA is unique to the individual. But that does not automatically decide the legal case. If in fact there is other extremely compelling evidence that contradicts the DNA evidence, we are likely to conclude that in this specific instance something invalidates the DNA evidence and that we should discount it.

In summary, then, for specific unique events of history, evidence based on scientific analysis can be important, but is not uniquely convincing.

However, even though it contains some questions about unique events, much of natural history is not of this type. Much of it is about the broad sweep of development of the universe, the solar system, the planet, or the earth's creatures. In other words, questions of natural history are usually about generalities, not particularities; about issues giving rise to repeated observational examples, not single instances. For these generalities, science is extremely powerful, but it continues to rely upon its principles of reproducibility and clarity.

We believe the universe is expanding from an initial event, starting some 13 billion years ago, because we can observe the Doppler shifts of characteristic radiation lines that are emitted from objects over an enormous range of distances from our solar system. We see that the farther away the object is, the more rapidly it is receding from us, as demonstrated by the frequency downshift of the light we see. Anyone, anywhere can observe these sorts of effects with telescopes and instruments that these days are relatively commonplace. The observed systematic trend is consistent with a more-or-less uniform expansion of the universe. If we project back the tracks of the expansion, by mentally running the universe in reverse, we find that our reversing tracks more-or-less bring all the objects together at the same time: the time that we identify as the Big Bang, or the age of the universe. This picture is confirmed by thousands of independent observations of different stars and astrophysical objects. Thus, the Big Bang theory of the origin of the universe is a generality: that the universe had a beginning roughly 13 billion years ago, when all of the objects we can see were far closer together. And it is a generality confirmed by many observational examples that show the same result.
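The backward extrapolation described above can be sketched numerically. Under a uniform expansion, recession velocity is proportional to distance, v = H0 d. The time for every object to run back to zero separation is then d / v = 1 / H0, and it comes out the same for all objects. The Python sketch below assumes small redshifts, so that v is approximately c times the fractional frequency shift. It fits H0 through the origin by least squares and converts 1/H0 to years. The helper names are hypothetical. The "observations" are synthetic points generated from an assumed value of H0 = 70 km/s/Mpc; real measurements scatter about such a line. Note that 1/H0 is only a rough age because the expansion rate has not been constant. Current estimates of the age of the universe are about 13.8 billion years.

```python
# Hypothetical sketch: estimate an expansion age from distance/velocity pairs,
# assuming uniform expansion (v = H0 * d) and small redshifts (v << c).

C_KM_S = 299_792.458          # speed of light in km/s
KM_PER_MPC = 3.0857e19        # kilometers in one megaparsec
SECONDS_PER_YEAR = 3.15576e7  # Julian year


def recession_velocity_km_s(f_emitted: float, f_observed: float) -> float:
    """Velocity implied by a frequency downshift; valid only when v << c."""
    z = f_emitted / f_observed - 1.0   # fractional shift (redshift)
    return C_KM_S * z


def fit_hubble_constant(distances_mpc: list[float], velocities_km_s: list[float]) -> float:
    """Least-squares slope of v = H0 * d constrained through the origin (km/s/Mpc)."""
    num = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den


def expansion_age_years(h0_km_s_mpc: float) -> float:
    """Time to run a uniform expansion back to a point: t = d / v = 1 / H0."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return 1.0 / h0_per_second / SECONDS_PER_YEAR


# Synthetic observations: assumed H0 = 70 km/s/Mpc, distances in Mpc.
ASSUMED_H0 = 70.0
distances = [10.0, 40.0, 100.0, 200.0, 400.0]
# Each (emitted, observed) frequency pair is lowered by a factor 1 + v/c.
observed = [(1.0, 1.0 / (1.0 + ASSUMED_H0 * d / C_KM_S)) for d in distances]
velocities = [recession_velocity_km_s(fe, fo) for fe, fo in observed]

h0 = fit_hubble_constant(distances, velocities)
age = expansion_age_years(h0)
print(f"fitted H0 = {h0:.1f} km/s/Mpc; expansion age ~ {age / 1e9:.1f} billion years")
```

Because every object obeys the same proportionality, each one gives the same value of d / v. The many independent observations therefore converge on one expansion age rather than on many different ones.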

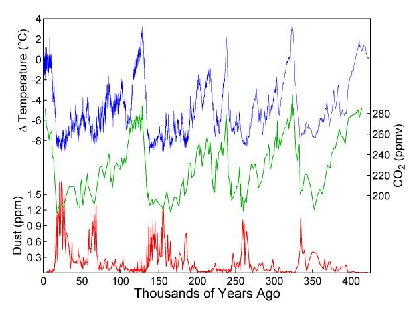

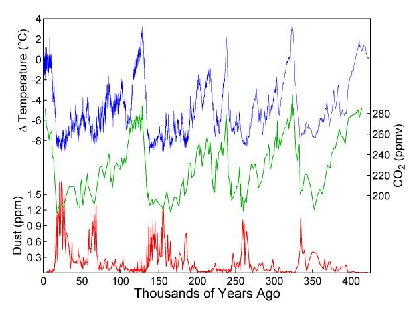

Figure 2.5: Temperature, atmospheric carbon dioxide, and dust record from the ice cores from Vostok, Antarctica.

What about earth's history? The history of the earth's climate is a topic of great current interest. How do we know what has happened to the climate in past ages? This information comes in all sorts of ways, from tree rings to paleontology. Perhaps the most convincing and detailed information comes from various types of "cores" sampled from successively deposited strata. Examples include ice cores and ocean sediments. Ice cores as long as three kilometers, covering the last three quarters of a million years, have been drilled from the ice built up by annual snowfall in Antarctica.

The resulting history of the climate is laid out in amazing detail. By analyzing the fraction of different isotopes of hydrogen and oxygen in the water, scientists can estimate the mean temperature. By analyzing trapped gases, they can determine the fraction of carbon dioxide, and by observing included microscopic particles, they can document the levels of dust in the atmosphere, in each case over the entire history represented by the core. Figure 2.5 illustrates the results from the core analyzed in 1999.33

These results are reproducible. If a core is drilled in the same place, the results one gets are the same. Actually, the longer (3 km) core was drilled more recently (2004), in a different place in Antarctica from the one I have illustrated,34 but it shows almost exactly the same results for the periods of history that overlap. Moreover, deep-sea-bed sediment cores from all over the globe show global ice mass levels, deduced from oxygen isotopes, that correlate extremely well with the ice-core data over the period they share, but stretch back to 5 million years ago. The rapid progress in these observations and analysis over the past decade has enabled us to build up a remarkable record of the climatic history of the earth. It enables us to construct well-informed theories of what factors influence the different aspects of climate, and to say how the climate varied through time. But these vital additions to our knowledge are still about broad generalities: the global or regional climate, not usually the highly specific questions that preoccupy historians. For example, the uncertainties in the exact age of the different ice-core samples can be as large as a thousand years or more. In the general picture of what happened in the last million years, this is a negligible uncertainty; but on the timescale of human lives (for example) it is large.


2.3 Inherent limitations of reproducibility

We have seen some examples of the great power of science's reliance upon reproducibility to arrive at knowledge. These examples are not intended to emphasize science's power; such a demonstration would be superfluous for most modern minds. They are meant to show the importance of reproducibility. We are so attuned to the culture of science that we generally take this reproducibility for granted. But we must now pause to recollect both that this reproducibility is not obvious in nature, and that in many fields of human knowledge the degree of reproducibility we require in science is absent.

Of course, substantial regularity in the natural world, and indeed in human society, was as self-evident to our ancestors as it is to us. But the extent to which precise and measurable reproducibility could be discovered and codified was not. The very concept and expression "law of nature" dates back only to the start of the scientific revolution, to Boyle and Newton. And in its original usage, it carried as much the judicial sense of an edict legislated by the Creator as the sense of an impersonal physical principle, or force of nature, that now comes to mind. Indeed, a case can be made that it was in substantial degree the expectation that law governed the natural world, fostered by a theology of God as law-giver, that provided the fertile intellectual climate for the growth of science. As late as the nineteenth century, Faraday motivated his search for unifying principles, and explained his approach to scientific investigation, by statements like "God has been pleased to work in his material creation by laws". By referring to God's pleasure, Faraday was not in the least intending to be metaphorical, and by laws he meant something probably much less abstract than would be commonplace today.

In drawing attention now to disciplines in which the reproducibility expected in science is absent, I want to start by reiterating that this absence does not, in my view, undermine their ability to provide real knowledge. The whole point of my analysis is to assert that non-scientific knowledge is real and essential. So I beseech colleagues from the disciplines I am about to mention to restrain any understandable impulse to bristle at the charge that their disciplines are not science. I remind you that I am using the word science to mean natural science, and the techniques that it depends upon. If the semantics is troubling, simply insert the qualification "natural" in front of my usage. Let us also stipulate from the outset that there are parts of each of these disciplines that either benefit from scientific techniques or indeed possess sufficient reproducibility to be scientifically analyzed. I am not at all doubting such a possibility. I am simply commenting that the core subject areas of these disciplines are not most fruitfully studied in this way, for fundamental reasons to do with their content.

In his (1997) graduate text Science Studies, which introduces various philosophical and sociological analyses of science, David J. Hess acknowledges without hesitation the difficulties in applying scientific analysis to other disciplines: "Probably the greatest weakness in this position comes when the philosophy of science is generalized from the natural sciences to the human sciences". He says specifically, "Many social phenomena are far too complicated to be predictable".35

In other words, in my terminology, these phenomena are not science. Yet a few pages later he says, "One of the reasons social scientists lose patience with philosophers of science is that we are constantly told that we are in some sense deficient scientists - we lack a paradigm, predictive ability, quantitative exactness, and so on - instead of being seen as divergent or different scientists".36 This is an argument about titles and semantics. Sociologists today acknowledge that sociology does not offer the kind of reproducibility that is characteristic of the natural sciences. They feel they must insist on the title of science, I believe because of the scientism of the age; without the imprimatur of the title they feel their discipline is in danger of being dismissed as non-knowledge. Yet they resent it when the essential epistemological differences between their field and science are pointed out. No wonder there are difficulties in this discussion. As a physical scientist, I need to keep out of this argument, but I will observe that if we disavow scientism, the whole of this discussion becomes more tractable. It is no longer a problem for sociology to be recognized as a field of knowledge in which reproducibility is not available.

History is a field in which there is thankfully less resentment towards an affirmation that it is not science. Obviously history, more often than not, is concerned with unique events in the past that cannot be repeated. Here is a historian's commentary on King James the Second's frequent remark to justify his intransigence: "My father made concessions and he was beheaded". Macaulay writes, "Even if it had been true that concession had been fatal to Charles the First, a man of sense would have remembered that a single experiment is not sufficient to establish a general rule even in sciences much less complicated than the science of government; that, since the beginning of the world, no two political experiments were ever made of which all the conditions were exactly alike ..."37 Macaulay's typical, but confusing, use of "science" has already been noted, but the point he makes very clearly is that there is no reproducibility in history. No more than a small fraction of its concerns benefit from analysis that bears the stamp of natural science. Yet no thoughtful person would deny that historical knowledge is true knowledge, that history at its best has high standards of scholarship and credibility, and that the study of history has high practical and theoretical value.

Similarly, the study of the law, jurisprudence, is a field whose research and practice cannot be scientific because it is not concerned with the reproducible. The circumstances of particular events cannot be subjected to repeated tests or to multiple observations. Moreover, the courts do not have the luxury of being able to defer judgement indefinitely until sufficient data become available. They have to arrive at a judgement that is binding on the protagonists even with insufficient data. Consequently, the legal system's approach to decision-making is very different from science's.

In Britain in the early 1980s, the government of Prime Minister Margaret Thatcher introduced policies in line with Milton Friedman's economic theories, which the press was fond of referring to as the "monetarist experiment". Here was what an economist must surely dream about: the chance to see an experimental verification of his theory. What was the result of this experiment? Was monetarism thereby confirmed or refuted? To judge by current economic opinion, it seems neither. Economists don't really know how to assess the outcome unambiguously, because this was a real economy with all sorts of extraneous influences; and, most importantly, one can't keep trying repeatedly until one gets consistent results. It may have been an experiment, but it was not truly a scientific experiment.

Economics is an interesting case here, because economists have large quantities of precise measured data and usefully employ highly sophisticated mathematics for many of their theories, a trait that they share with some of the hardest physical scientists. Economics is a field of high intellectual rigor. But the absence of any opportunity for truly reproducible tests or observations, and the impossibility of isolating the different components of economic systems, mean that economics as a discipline is qualitatively different from science.

Politics is, to many a physical scientist, baffling and mysterious. Here is a field, if there ever was one, that is the complete contradiction of what scientists seek in nature. In place of consistency and predictability we find pragmatism and the winds of public opinion. In place of dispassionate analysis, we have the power of oratory. And once again, nothing in the least approximating the opportunity for reproducible tests or observations offers itself to political practitioners. It seems a great pity, and perhaps a sign of wistful optimism, not to mention the scientism that is our present subject, that the academic field of study is referred to these days almost universally as Political Science.

We will discuss more, equally important, examples of inherently non-scientific disciplines later. But these suffice to illustrate that not only is science not all the knowledge there is, it may not even be the most important knowledge. And however much we might hope for greater precision and confidence in the findings of the non-scientific disciplines, it is foolishness to think they will ever possess the kind of predictive power that we attribute to science. Their field of endeavor does not lend itself to the epistemological techniques that underlie science's reliable models and convincing proofs. They are about more indefinite, intractable, unique, and often more human problems.

In short, they are not about nature.